A bit of an info dump.

Using FFmpeg, I convert a 3D SBS video to a 640x640 left-eye or right-eye video in about 30 minutes. I'm using CUDA. It's also quicker if I start from a smaller-resolution source, but I prefer to start from a larger one. YOLO11's native resolution is 640; you can go bigger, but most of the time 640 is decent. Re-encoding has been simpler than the more niche Python+FFmpeg, OpenCV+GPU, or torchvision decoding approaches I've tried. Those ultimately take about the same time because of the format conversion needed before YOLO can process the frames, and even when everything stays on the GPU I haven't gotten much of an efficiency gain.
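For reference, here is a minimal sketch of that kind of conversion. It assumes a full-width SBS source where each eye takes exactly half the frame, and an FFmpeg build with CUDA/NVENC support. The helper name `build_ffmpeg_cmd` is made up for illustration, and `h264_nvenc` is an assumed encoder choice rather than the exact command used here.

```python
def build_ffmpeg_cmd(src, dst, eye="left", size=640) -> list:
    """Build an FFmpeg command that keeps one eye of a side-by-side (SBS)
    video and scales it to size x size.

    Assumes a full-width SBS layout where each eye occupies half the width.
    """
    if eye not in ("left", "right"):
        raise ValueError("eye must be 'left' or 'right'")
    x_offset = "0" if eye == "left" else "iw/2"
    # crop=w:h:x:y keeps one half of the frame, then scale resizes it to a square
    vf = f"crop=iw/2:ih:{x_offset}:0,scale={size}:{size}"
    return [
        "ffmpeg",
        "-hwaccel", "cuda",      # GPU decode (assumes an NVIDIA-enabled FFmpeg build)
        "-i", src,
        "-vf", vf,
        "-c:v", "h264_nvenc",    # GPU encode; swap for libx264 if NVENC isn't available
        "-an",                   # audio isn't needed for pose estimation
        dst,
    ]
```

Run it with `subprocess.run(build_ffmpeg_cmd("in.mp4", "left_640.mp4"), check=True)`. If each eye isn't square in the source, the scale step will stretch it. Whether that matters depends on how the pose model was fine-tuned.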

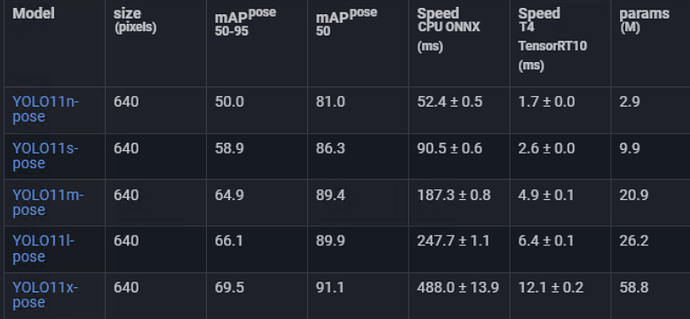

Once the video is at a good size, I run every frame through a previously fine-tuned yolo11m-pose model. Using batches of 120 frames, it completes in about 90% of the video's runtime. I run the predictions without tracking, with a minimum confidence of 10% and an IoU threshold of 99%. I then trim or pad each frame's data to exactly 8 detections.

That's 60 predictions per second (for a 60 fps video), for 60 seconds a minute, for 60 minutes. Each frame has 8 detections, some of which are blank. Each detection has a bounding box made of 2 xy coordinates (4 values) and 21 keypoints, each with x and y coordinates and a confidence score (63 values), so 67 values per detection.
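Here is a rough sketch of the trim/pad step in NumPy. It makes two assumptions: detections are ranked by box confidence, and empty slots are filled with zeros. `pack_frame` is a hypothetical helper. With Ultralytics, the inputs for each result would come from `result.boxes.xyxy`, `result.boxes.conf` and `result.keypoints.data`, each converted with `.cpu().numpy()`.

```python
import numpy as np

MAX_DET = 8                    # detections kept per frame
NUM_KPT = 21                   # keypoints in the fine-tuned pose model
FEATS = 4 + NUM_KPT * 3        # xyxy box + (x, y, conf) per keypoint = 67

def pack_frame(boxes_xyxy, box_conf, keypoints, max_det=MAX_DET):
    """Sort one frame's detections by box confidence, keep the top max_det,
    and zero-pad to a fixed (max_det, 67) array.

    boxes_xyxy: (N, 4), box_conf: (N,), keypoints: (N, 21, 3) with x, y, conf.
    """
    boxes_xyxy = np.asarray(boxes_xyxy, dtype=np.float32).reshape(-1, 4)
    box_conf = np.asarray(box_conf, dtype=np.float32).reshape(-1)
    keypoints = np.asarray(keypoints, dtype=np.float32).reshape(-1, NUM_KPT * 3)
    order = np.argsort(-box_conf)[:max_det]               # most confident first
    rows = np.concatenate([boxes_xyxy[order], keypoints[order]], axis=1)
    out = np.zeros((max_det, FEATS), dtype=np.float32)    # blank slots stay zero
    out[: len(rows)] = rows
    return out
```

Stacking the packed frames gives an array of shape `(frames, 8, 67)`.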

That's up to 60x60x60x8x67 values for an hour-long video: 115,776,000 data points.
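Here is the same count as a quick check, assuming 60 fps and the 8 x 67 per-frame layout described above. Stored as float32, it comes to roughly 463 MB.

```python
FPS = 60                      # predictions per second
SECONDS = 60 * 60             # one hour of video
MAX_DET = 8                   # detections kept per frame
BOX_VALUES = 2 * 2            # two (x, y) corners
KPT_VALUES = 21 * 3           # 21 keypoints x (x, y, conf)
VALUES_PER_DET = BOX_VALUES + KPT_VALUES    # 67

frames = FPS * SECONDS                      # 216,000 frames
shape = (frames, MAX_DET, VALUES_PER_DET)
total_values = frames * MAX_DET * VALUES_PER_DET
float32_mb = total_values * 4 / 1e6         # 4 bytes per float32 value

print(shape, f"{total_values:,}", f"{float32_mb:.0f} MB")
# → (216000, 8, 67) 115,776,000 463 MB
```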

If I just choose the most confident prediction in each frame, I can animate them into full-length animated GIFs similar to the last one I posted. Everything goes out the window once there's more than one performer in a scene, though.

With one performer, I get multiple detections because of the low confidence threshold and high IoU I use. That was intentional, so I could try things like finding the most similar pose in each frame based on the total differences across all frames in the video. I could also try other things, like merging certain points or working with the differences between points.
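For illustration only, here is a much simpler, frame-to-frame cousin of that "most similar pose" idea. It starts from the most confident detection, then in each following frame picks the detection whose keypoints are closest on average to the previous pick. This is a greedy nearest-neighbour swap-in, not the whole-video comparison described above. It assumes a hypothetical zero-padded `(frames, 8, 67)` layout (xyxy box, then 21 x (x, y, conf)), and `greedy_pose_track` is a made-up name.

```python
import numpy as np

def greedy_pose_track(dets, num_kpt=21):
    """Pick one detection per frame, preferring the one closest to the previous pick.

    dets: (T, D, 4 + num_kpt*3) packed detections; all-zero rows are empty slots.
    Returns (T,) chosen slot indices, -1 where a frame has no detections.
    """
    T, D, _ = dets.shape
    kpts = dets[..., 4:].reshape(T, D, num_kpt, 3)
    xy, kconf = kpts[..., :2], kpts[..., 2]          # (T, D, K, 2), (T, D, K)
    valid = np.any(dets != 0, axis=-1)               # (T, D)
    chosen = np.full(T, -1, dtype=int)
    prev = None                                      # keypoints of the last pick
    for t in range(T):
        idx = np.flatnonzero(valid[t])
        if idx.size == 0:
            continue                                 # empty frame: keep prev, mark -1
        if prev is None:
            # first non-empty frame: start from the highest mean keypoint confidence
            best = idx[np.argmax(kconf[t, idx].mean(axis=1))]
        else:
            # mean Euclidean distance per keypoint to the previous pick
            dist = np.linalg.norm(xy[t, idx] - prev, axis=-1).mean(axis=1)
            best = idx[np.argmin(dist)]
        chosen[t] = best
        prev = xy[t, best]
    return chosen
```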

Ultimately it all gave much the same outcome, and the maths hurt my head, so I stuck to the most confident prediction. The 21 lines correspond to the 21 keypoints.
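Picking the most confident prediction per frame could look like the sketch below. It assumes "most confident" means the highest mean keypoint confidence, and it uses the same hypothetical zero-padded `(frames, 8, 67)` layout. `most_confident_tracks` is a made-up name.

```python
import numpy as np

def most_confident_tracks(dets, num_kpt=21):
    """dets: (T, D, 4 + num_kpt*3) packed detections.

    Returns (T, num_kpt, 3) keypoints (x, y, conf) of the detection with the
    highest mean keypoint confidence in each frame.
    """
    T, D, _ = dets.shape
    kpts = dets[..., 4:].reshape(T, D, num_kpt, 3)
    score = kpts[..., 2].mean(axis=-1)      # (T, D); zero-padded slots score 0
    best = score.argmax(axis=1)             # (T,) best slot per frame
    return kpts[np.arange(T), best]         # (T, num_kpt, 3)
```

Taking one coordinate gives a `(frames, 21)` array. For example, `tracks[..., 1]` gives y, though which axis is plotted is an assumption. `matplotlib.pyplot.plot` draws one line per column of a 2-D array, so you get 21 lines for 21 keypoints.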

And then I smoothed that with a Savitzky-Golay (savgol) filter.
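Here is a minimal sketch of the smoothing with SciPy's `savgol_filter`, applied along the time axis of a `(frames, 21)` array. The window of 15 frames (0.25 s at 60 fps) and the polynomial order of 3 are guesses, not the values used for the graph.

```python
import numpy as np
from scipy.signal import savgol_filter

def smooth_tracks(signals, window=15, polyorder=3):
    """Savitzky-Golay smoothing along the time axis.

    signals: (T, K) array, one column per keypoint.
    window must be odd, greater than polyorder, and no longer than T.
    """
    signals = np.asarray(signals, dtype=float)
    return savgol_filter(signals, window_length=window, polyorder=polyorder, axis=0)
```

Savitzky-Golay fits a local polynomial in each window, so it keeps peak shapes better than a plain moving average of the same length.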

And then some more maths that the internet told me about, to extract peaks and troughs. The X axis looks trash, but that's expected.
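One common way to do the peak/trough step is to run `scipy.signal.find_peaks` on the signal and on its negation, then write the extrema out as funscript actions (`at` in milliseconds, `pos` from 0 to 100). This is a sketch, not necessarily the maths used here. The `prominence` value is an assumption. Image y grows downward, so depending on whether the keypoint moves up or down the image you may need to flip `pos`.

```python
import numpy as np
from scipy.signal import find_peaks

def signal_to_funscript(signal, fps=60.0, prominence=0.1):
    """Turn a 1-D motion signal into funscript actions at its peaks and troughs.

    prominence is measured on the signal after min-max scaling to 0..1.
    """
    x = np.asarray(signal, dtype=float)
    lo, hi = x.min(), x.max()
    norm = (x - lo) / (hi - lo) if hi > lo else np.zeros_like(x)   # scale to 0..1
    peaks, _ = find_peaks(norm, prominence=prominence)      # local maxima
    troughs, _ = find_peaks(-norm, prominence=prominence)   # local minima
    frames = np.sort(np.concatenate([peaks, troughs]))
    actions = [
        {"at": int(round(f * 1000.0 / fps)), "pos": int(round(norm[f] * 100))}
        for f in frames
    ]
    return {"version": "1.0", "inverted": False, "range": 100, "actions": actions}
```

Save the returned dict with `json.dump` to get a `.funscript` file.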

I didn't love the approach, but it can be a case of:

- put the video in a folder

- press a button

- come back an hour later to an ugly graph and a funscript file of that graph

- use a tool that doesn't exist (OFS is great, but of course it doesn't behave when I load in 21 funscripts) to scrub through the video and mark which keypoints to use for given time frames, which eventually assembles into a draft funscript that could help someone make an actual good funscript.
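The final step of that missing tool, stitching the marked segments into one draft signal, might reduce to something like the sketch below. `stitch_segments` and its `(start_frame, end_frame, keypoint_index)` segment format are hypothetical. Rescaling each segment to 0..1 is just one way to make different keypoints line up.

```python
import numpy as np

def stitch_segments(signals, segments):
    """Assemble one draft signal from per-keypoint signals.

    signals: (T, K) smoothed keypoint signals.
    segments: list of (start_frame, end_frame, keypoint_index), end exclusive,
              each covering at least one frame.
    Frames not covered by any segment are NaN.
    """
    signals = np.asarray(signals, dtype=float)
    out = np.full(signals.shape[0], np.nan)
    for start, end, k in segments:
        seg = signals[start:end, k]
        lo, hi = seg.min(), seg.max()
        # rescale each segment to 0..1 so keypoints with different ranges line up
        out[start:end] = (seg - lo) / (hi - lo) if hi > lo else 0.0
    return out
```

Unmarked frames stay `NaN`, so they'd need to be dropped or filled before any peak/trough extraction.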

And I feel like where I'd get with that isn't much better than what other folks have already done in simpler ways.

So instead, I'd rather take my 115,776,000 data points and run them through a model to get a draft funscript. I also figured I'd do it with PyTorch instead of TensorFlow, which I was used to. That was an extra bit of learning. On top of that, it can be hard to even know whether you've designed a model's layers correctly, or stuffed up your code, until the model actually learns something. I started with a model that generates 120 predictions for a 120-frame sequence at once, because that seems to result in smoother outputs. The input data was YOLO pose data I pulled from a few different videos that I had scripts for. I interpolated the funscripts with quadratic interpolation to create a data point for each frame.
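Here is a sketch of the target preparation. It assumes funscript actions are `{"at": ms, "pos": 0-100}` dicts. It uses SciPy's `interp1d` with `kind="quadratic"` to get one target per frame, then cuts aligned features and targets into 120-frame windows. The helper names, the 0..1 scaling, the clipping, and the non-overlapping stride are all assumptions.

```python
import numpy as np
from scipy.interpolate import interp1d

def funscript_to_frames(actions, num_frames, fps=60.0):
    """Quadratic interpolation of funscript actions to one 0..1 target per frame.

    Needs at least 3 actions with strictly increasing "at" values.
    """
    at = np.array([a["at"] for a in actions], dtype=float) / 1000.0   # seconds
    pos = np.array([a["pos"] for a in actions], dtype=float) / 100.0  # 0..1
    f = interp1d(at, pos, kind="quadratic", bounds_error=False,
                 fill_value=(pos[0], pos[-1]))   # hold first/last value outside the script
    t = np.arange(num_frames) / fps
    return np.clip(f(t), 0.0, 1.0)               # quadratic splines can overshoot

def make_windows(features, targets, seq_len=120, stride=120):
    """Cut aligned per-frame features (T, F) and targets (T,) into
    fixed-length sequences. Requires T >= seq_len."""
    starts = range(0, len(targets) - seq_len + 1, stride)
    X = np.stack([features[s:s + seq_len] for s in starts])   # (N, seq_len, F)
    y = np.stack([targets[s:s + seq_len] for s in starts])    # (N, seq_len)
    return X, y
```

Here `features` would be the pose data flattened per frame. For example, `dets.reshape(len(dets), -1)` on a `(frames, 8, 67)` array gives 536 inputs per frame.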

I wasn't training the model with the intent of distributing the end result, since it was trained on funscripts I don't have permission to train on. But if it had proved the concept sufficiently, I'd have started training from scratch with permissible data.

This is an example of a prediction on unseen data.

Green is the actual funscript.

Orange is the value the model predicted.

Blue is the orange line, normalized.

I took a break from it about a month ago, when work got busy. If I'm being charitable, I'd say it was learning sequences from the pose data; there is some harmonic correlation visible between the green and orange lines. But I also went back through the data and found I was training on some sequences that were quite hard, even for a human, to work out, which probably wasn't helping.

All that, and I still don't love this approach either :D. I'd prefer to try using the YOLO model's later layers as a feature extractor instead of just the pose data. Those layers are quite large, though, so I'd have to compromise on sequence length for predictions or work out a good way to reduce their size first.
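One common way to shrink a convolutional feature map before feeding it to a sequence model is average pooling down to a small grid. In PyTorch that's `torch.nn.functional.adaptive_avg_pool2d`, and a forward hook (`register_forward_hook`) can capture an intermediate layer's output. The NumPy sketch below only shows the size reduction. It assumes a `(C, H, W)` map whose sides divide evenly by the grid, isn't tied to any specific YOLO11 layer, and uses a hypothetical name, `pool_feature_map`.

```python
import numpy as np

def pool_feature_map(fmap, grid=4):
    """Average-pool a (C, H, W) feature map down to (C, grid, grid), then flatten.

    Assumes H and W are divisible by grid (e.g. an 80x80 map with grid=4).
    """
    c, h, w = fmap.shape
    if h % grid or w % grid:
        raise ValueError("H and W must be divisible by grid")
    # split each spatial axis into (grid, block) and average within each block
    pooled = fmap.reshape(c, grid, h // grid, grid, w // grid).mean(axis=(2, 4))
    return pooled.reshape(-1)    # C * grid * grid values per frame
```

Take a stride-8 map from a 640x640 input (80x80) with a hypothetical 256 channels. With `grid=4`, each frame goes from 1,638,400 values to 4,096.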

Either way, this is still a problem I come back to and bang my head against every so often.