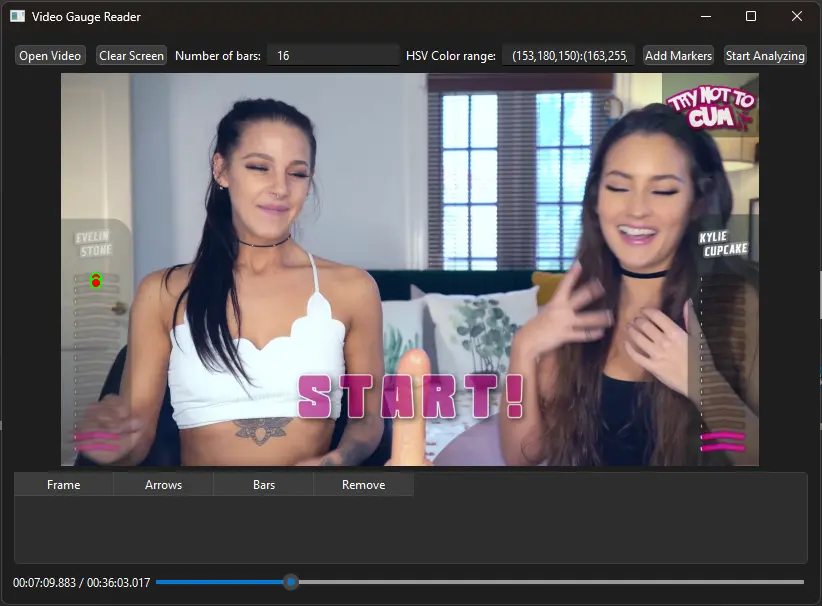

Thanks for your script. It inspired me to write another version with some improvements:

- Replaced tkinter with PyQt6
- Read multiple gauges
- Support gauges that change position
- Refine detection with HSV color ranges
- Configurable number of points to read (to support gauges with something other than 16 bars)
- Display a real-time simulation that shows the color readings and the detection
- Simplify the detected points to retain only peaks, valleys, and plateaus

I have completed 59 funscripts with it (using the raw readings, with only minimal cleanup in OFS to remove outliers): https://discuss.eroscripts.com/t/the-jerk-off-games-try-not-to-cum-59-funscripts-opencv-gauge-readings/204137
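For anyone who wants the core idea without the GUI, here is a minimal sketch of two of the points above: spacing a configurable number of sample points along an arrow, and testing one HSV reading against a lower/upper range. The names `sample_points` and `in_hsv_range` are made up for this illustration and are not functions from the script, and plain tuples stand in for OpenCV images.

```python
def sample_points(start, end, num_points):
    """Return num_points (x, y) pixel positions evenly spaced from start to end."""
    if num_points < 2:
        raise ValueError("need at least two points to span the arrow")
    (x0, y0), (x1, y1) = start, end
    step_x = (x1 - x0) / (num_points - 1)
    step_y = (y1 - y0) / (num_points - 1)
    return [(int(x0 + i * step_x), int(y0 + i * step_y)) for i in range(num_points)]


def in_hsv_range(color, lower, upper):
    """True when every HSV channel of color lies within [lower, upper]."""
    return all(lo <= c <= hi for c, lo, hi in zip(color, lower, upper))


# A vertical arrow drawn over a 16-bar gauge (hypothetical coordinates)
points = sample_points((100, 50), (100, 350), 16)
print(points[0], points[-1], len(points))  # (100, 50) (100, 350) 16

# A green-ish reading tested against a hypothetical green range
print(in_hsv_range((60, 200, 200), (40, 100, 100), (80, 255, 255)))  # True
```

Keep in mind that OpenCV stores hue as 0 to 179 for 8-bit images, so a range copied from a color picker that uses 0 to 360 degrees needs its hue halved first.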

```python
import sys
import json
import logging

import cv2
import numpy as np
from PyQt6.QtWidgets import (
    QApplication, QWidget, QPushButton, QLabel, QFileDialog, QSlider,
    QHBoxLayout, QVBoxLayout, QLineEdit, QTableWidget, QTableWidgetItem,
    QMessageBox, QAbstractItemView, QSizePolicy, QSplitter
)
from PyQt6.QtGui import QPixmap, QImage, QPainter, QPen, QColor, QMouseEvent, QPaintEvent
from PyQt6.QtCore import Qt, QPointF, QTimer

# Set up logging
logging.basicConfig(level=logging.DEBUG, format='%(asctime)s %(levelname)s:%(message)s')


class VideoPlayerLabel(QLabel):
    """Shows the current frame and lets the user draw one arrow per gauge."""

    def __init__(self, parent=None):
        super().__init__(parent)
        self.setMouseTracking(True)
        self.start_point = None
        self.end_point = None
        self.arrows = []  # (start, end) pairs in image coordinates
        self.drawing = False
        self.current_pixmap = None
        self.points_of_interest = []  # (x, y, label, rgb) tuples in image coordinates
        self.setScaledContents(False)
        self.setSizePolicy(QSizePolicy.Policy.Expanding, QSizePolicy.Policy.Expanding)
        self.setMinimumHeight(300)

    def setPixmap(self, pixmap):
        # Keep the full-size pixmap; paintEvent does the scaling
        self.current_pixmap = pixmap
        self.update()

    def compute_scaling_factors(self):
        """Return (x_scale, y_scale, x_offset, y_offset) mapping image to widget coordinates."""
        if self.current_pixmap:
            pixmap_width = self.current_pixmap.width()
            pixmap_height = self.current_pixmap.height()
            label_width = self.width()
            label_height = self.height()
            aspect_ratio_pixmap = pixmap_width / pixmap_height
            aspect_ratio_label = label_width / label_height
            if aspect_ratio_pixmap > aspect_ratio_label:
                scale = label_width / pixmap_width
            else:
                scale = label_height / pixmap_height
            x_offset = (label_width - pixmap_width * scale) / 2
            y_offset = (label_height - pixmap_height * scale) / 2
            return scale, scale, x_offset, y_offset
        else:
            return None, None, None, None

    def _event_to_image_point(self, event):
        """Convert a mouse position from widget coordinates to image coordinates."""
        x_scale, y_scale, x_offset, y_offset = self.compute_scaling_factors()
        return QPointF((event.position().x() - x_offset) / x_scale,
                       (event.position().y() - y_offset) / y_scale)

    def mousePressEvent(self, event: QMouseEvent):
        if event.button() == Qt.MouseButton.LeftButton and self.current_pixmap:
            point = self._event_to_image_point(event)
            # Only start an arrow when the click lands inside the image
            if 0 <= point.x() < self.current_pixmap.width() and 0 <= point.y() < self.current_pixmap.height():
                self.start_point = point
                self.drawing = True

    def mouseMoveEvent(self, event: QMouseEvent):
        if self.drawing and self.current_pixmap:
            self.end_point = self._event_to_image_point(event)
            self.update()

    def mouseReleaseEvent(self, event: QMouseEvent):
        if event.button() == Qt.MouseButton.LeftButton and self.drawing and self.current_pixmap:
            self.end_point = self._event_to_image_point(event)
            self.arrows.append((self.start_point, self.end_point))
            self.start_point = None
            self.end_point = None
            self.drawing = False
            self.update()

    def paintEvent(self, event: QPaintEvent):
        painter = QPainter(self)
        if self.current_pixmap:
            x_scale, y_scale, x_offset, y_offset = self.compute_scaling_factors()

            def to_widget(x, y):
                return QPointF(x * x_scale + x_offset, y * y_scale + y_offset)

            # Scale the pixmap to fit the label while keeping the aspect ratio, then center it
            scaled_pixmap = self.current_pixmap.scaled(
                self.size(), Qt.AspectRatioMode.KeepAspectRatio, Qt.TransformationMode.SmoothTransformation
            )
            painter.drawPixmap(int(x_offset), int(y_offset), scaled_pixmap)

            # Draw the stored arrows plus the one currently being dragged
            painter.setPen(QPen(QColor(0, 255, 0), 2))
            arrows = list(self.arrows)
            if self.drawing and self.start_point is not None and self.end_point is not None:
                arrows.append((self.start_point, self.end_point))
            for start, end in arrows:
                scaled_start = to_widget(start.x(), start.y())
                scaled_end = to_widget(end.x(), end.y())
                painter.drawLine(scaled_start, scaled_end)
                painter.setBrush(QColor(255, 0, 0))
                painter.drawEllipse(scaled_start, 5, 5)
                painter.drawEllipse(scaled_end, 5, 5)

            # Draw points of interest with their HSV reading as a label
            for x, y, label, color in self.points_of_interest:
                painter.setPen(QPen(QColor(*color), 5))
                scaled_point = to_widget(x, y)
                painter.drawPoint(scaled_point)
                # Put the label 100 px to the left, or to the right when there is no room
                label_x = scaled_point.x() - 100 - painter.fontMetrics().horizontalAdvance(label)
                if label_x < 0:
                    label_x = scaled_point.x() + 100
                painter.drawText(QPointF(label_x, scaled_point.y()), label)
        painter.end()


class MainWindow(QWidget):
    def __init__(self):
        super().__init__()
        self.setWindowTitle("Video Gauge Reader")
        self.video_path = None
        self.total_frames = 0
        self.current_frame = 0
        self.frame = None  # last decoded frame (BGR)
        self.markers = {}
        self.analysis_timer = None
        self.analysis_results = {}
        self.cap = None
        self.is_analyzing = False
        self.init_ui()

    def init_ui(self):
        # Video display area
        self.video_label = VideoPlayerLabel()

        # Buttons
        self.open_button = QPushButton("Open Video")
        self.clear_button = QPushButton("Clear Screen")
        self.add_markers_button = QPushButton("Add Markers")
        self.start_analysis_button = QPushButton("Start Analyzing")

        # Frame indicator
        self.frame_indicator = QLabel("0 / 0")

        # Slider
        self.frame_slider = QSlider(Qt.Orientation.Horizontal)
        self.frame_slider.setEnabled(False)

        # Number of bars
        self.num_bars_edit = QLineEdit("16")
        self.num_bars_label = QLabel("Number of bars:")

        # Color range input
        self.color_range_edit = QLineEdit("(0,0,0):(255,255,255)")
        self.color_range_label = QLabel("HSV Color range:")

        # Markers table
        self.markers_table = QTableWidget()
        self.markers_table.setColumnCount(4)
        self.markers_table.setHorizontalHeaderLabels(["Frame", "Arrows", "Bars", "Remove"])
        self.markers_table.setEditTriggers(QAbstractItemView.EditTrigger.NoEditTriggers)

        # Create a splitter to hold the video and the table
        splitter = QSplitter(Qt.Orientation.Vertical)
        splitter.addWidget(self.video_label)
        splitter.addWidget(self.markers_table)
        splitter.setSizes([400, 200])  # Adjust initial sizes as needed

        # Layouts
        controls_layout = QHBoxLayout()
        controls_layout.addWidget(self.open_button)
        controls_layout.addWidget(self.clear_button)
        controls_layout.addWidget(self.num_bars_label)
        controls_layout.addWidget(self.num_bars_edit)
        controls_layout.addWidget(self.color_range_label)
        controls_layout.addWidget(self.color_range_edit)
        controls_layout.addWidget(self.add_markers_button)
        controls_layout.addWidget(self.start_analysis_button)

        frame_layout = QHBoxLayout()
        frame_layout.addWidget(self.frame_indicator)
        frame_layout.addWidget(self.frame_slider)

        main_layout = QVBoxLayout()
        main_layout.addLayout(controls_layout)
        main_layout.addWidget(splitter)
        main_layout.addLayout(frame_layout)
        self.setLayout(main_layout)

        # Connections
        self.open_button.clicked.connect(self.open_video)
        self.clear_button.clicked.connect(self.clear_screen)
        self.add_markers_button.clicked.connect(self.add_markers)
        self.start_analysis_button.clicked.connect(self.toggle_analysis)
        self.frame_slider.valueChanged.connect(self.slider_moved)
        self.markers_table.cellClicked.connect(self.remove_marker)
        self.color_range_edit.editingFinished.connect(self.update_color_range)

        # Initialize the thresholds (accept every color until the user narrows the range)
        self.lower_color_range = np.array([0, 0, 0])
        self.upper_color_range = np.array([255, 255, 255])

    def open_video(self):
        file_name, _ = QFileDialog.getOpenFileName(self, "Open Video File", "", "Video Files (*.mp4 *.avi *.mov)")
        if not file_name:
            return
        if self.cap is not None:
            self.cap.release()
        self.video_path = file_name
        self.cap = cv2.VideoCapture(self.video_path)
        self.total_frames = int(self.cap.get(cv2.CAP_PROP_FRAME_COUNT))
        self.fps = self.cap.get(cv2.CAP_PROP_FPS)
        # Validate before dividing by fps below
        if self.total_frames <= 0 or self.fps <= 0:
            logging.error("Video has zero frames or failed to read frame count / frame rate.")
            QMessageBox.critical(self, "Error", "Failed to read frame count or frame rate from video.")
            self.cap.release()
            return
        self.total_duration_ms = (self.total_frames / self.fps) * 1000
        self.total_duration_str = self.ms_to_time(self.total_duration_ms)
        logging.debug(f"Opened video {self.video_path} with total_frames={self.total_frames}")
        self.frame_slider.setMaximum(self.total_frames - 1)
        self.frame_slider.setEnabled(True)
        self.load_frame(0)

    def load_frame(self, frame_number):
        if self.cap is not None and self.cap.isOpened():
            self.cap.set(cv2.CAP_PROP_POS_FRAMES, frame_number)
            ret, frame = self.cap.read()
            if ret:
                # Only keep frames that were actually decoded
                self.frame = frame
                self.current_frame = frame_number
                self.display_frame(frame)
                self.frame_indicator.setText(f"{self.ms_to_time(self.cap.get(cv2.CAP_PROP_POS_MSEC))} / {self.total_duration_str}")
                self.frame_slider.setValue(self.current_frame)
            else:
                logging.error(f"Failed to read frame {frame_number}.")

    def display_frame(self, frame):
        rgb_image = cv2.cvtColor(frame, cv2.COLOR_BGR2RGB)
        h, w, ch = rgb_image.shape
        bytes_per_line = ch * w
        qt_image = QImage(rgb_image.data, w, h, bytes_per_line, QImage.Format.Format_RGB888)
        pixmap = QPixmap.fromImage(qt_image)
        # Set the pixmap in the video label
        self.video_label.setPixmap(pixmap)
        # Update points of interest
        self.update_points_of_interest()

    def clear_screen(self):
        self.video_label.arrows = []
        self.video_label.update()

    def add_markers(self):
        try:
            num_bars = int(self.num_bars_edit.text())
        except ValueError:
            QMessageBox.warning(self, "Warning", "Number of bars must be an integer.")
            return
        if num_bars < 2:
            # calculate_points divides by (num_bars - 1)
            QMessageBox.warning(self, "Warning", "Number of bars must be at least 2.")
            return
        if self.frame is None:
            QMessageBox.warning(self, "Warning", "Open a video before adding markers.")
            return

        # Store markers: one entry per arrow drawn on the current frame
        self.markers[self.current_frame] = {
            'arrows': [{'coordinates': (arrow[0], arrow[1]),
                        'points_of_interest': self.calculate_points((arrow[0].x(), arrow[0].y()), (arrow[1].x(), arrow[1].y()), num_bars),
                        'distance': arrow[1].y() - arrow[0].y(),
                        'ref_y': arrow[1].y()}
                       for arrow in self.video_label.arrows],
            'num_bars': num_bars,
        }
        logging.debug(f"Added markers at frame {self.current_frame}: {self.markers[self.current_frame]}")

        # Update table
        self.update_markers_table()

        # Clear arrows
        self.video_label.arrows = []
        self.video_label.update()

        # Update points of interest
        self.update_points_of_interest()

    def update_markers_table(self):
        self.markers_table.setRowCount(0)
        for idx, frame_number in enumerate(sorted(self.markers.keys())):
            arrows_count = len(self.markers[frame_number]['arrows'])
            num_bars = self.markers[frame_number]['num_bars']
            self.markers_table.insertRow(idx)
            self.markers_table.setItem(idx, 0, QTableWidgetItem(str(frame_number)))
            self.markers_table.setItem(idx, 1, QTableWidgetItem(str(arrows_count)))
            self.markers_table.setItem(idx, 2, QTableWidgetItem(str(num_bars)))
            remove_button = QPushButton("X")
            self.markers_table.setCellWidget(idx, 3, remove_button)
            remove_button.clicked.connect(lambda _, row=idx: self.delete_marker(row))

    def delete_marker(self, row):
        frame_number = int(self.markers_table.item(row, 0).text())
        del self.markers[frame_number]
        logging.debug(f"Deleted markers at frame {frame_number}")
        self.update_markers_table()
        self.update_points_of_interest()

    def remove_marker(self, row, column):
        if column == 3:
            self.delete_marker(row)

    def slider_moved(self, value):
        # During analysis, only refresh the preview every 1000 frames to keep it fast
        if not self.is_analyzing or (value % 1000 == 0):
            self.load_frame(value)

    def toggle_analysis(self):
        if self.start_analysis_button.text() == "Start Analyzing":
            if not self.markers:
                QMessageBox.warning(self, "Warning", "No markers to analyze.")
                return
            self.start_analysis_button.setText("Stop Analyzing")
            self.is_analyzing = True
            self.analysis_results = {}

            # Start from the first marker frame
            first_marker_frame = min(self.markers.keys())
            self.current_frame = first_marker_frame
            self.cap.set(cv2.CAP_PROP_POS_FRAMES, first_marker_frame)

            # Start a QTimer to process frames incrementally
            self.analysis_timer = QTimer()
            self.analysis_timer.timeout.connect(self.process_next_frame)
            self.analysis_timer.start(0)  # Process as fast as possible without blocking the GUI
            logging.debug(f"Analysis started from frame {first_marker_frame}.")
        else:
            self.is_analyzing = False
            if self.analysis_timer:
                self.analysis_timer.stop()
            self.start_analysis_button.setText("Start Analyzing")
            logging.debug("Analysis stopped.")
            # Prompt to save results
            self.save_results(self.analysis_results)

    def process_next_frame(self):
        if not self.is_analyzing or self.current_frame >= self.total_frames:
            self.analysis_finished()
            return
        ret, frame = self.cap.read()
        if not ret:
            logging.warning(f"Failed to read frame {self.current_frame}. Ending analysis.")
            self.analysis_finished()
            return

        # Update the progress indicator
        self.frame_indicator.setText(f"{self.ms_to_time(self.cap.get(cv2.CAP_PROP_POS_MSEC))} / {self.total_duration_str}")
        self.frame_slider.setValue(self.current_frame)

        # Process the frame
        self.analyze_current_frame(frame)
        self.current_frame += 1

    def update_color_range(self):
        try:
            input_text = self.color_range_edit.text()
            lower, upper = input_text.split(':')
            # Parse lower and upper HSV bounds
            lower_h, lower_s, lower_v = map(int, lower.strip('()').split(','))
            upper_h, upper_s, upper_v = map(int, upper.strip('()').split(','))
            # Clamp to 0-255 (OpenCV hue only goes up to 179 for 8-bit images)
            self.lower_color_range = np.array([max(0, min(c, 255)) for c in (lower_h, lower_s, lower_v)])
            self.upper_color_range = np.array([max(0, min(c, 255)) for c in (upper_h, upper_s, upper_v)])
            logging.debug(f"Color ranges updated - Lower: {self.lower_color_range}, Upper: {self.upper_color_range}")
        except (ValueError, IndexError):
            QMessageBox.warning(self, "Invalid Input", "Please enter a valid HSV color range in the format (h,s,v):(h,s,v)")

    def analyze_current_frame(self, frame):
        try:
            # Use the most recent marker set at or before the current frame
            applicable_frame_number = max((f for f in self.markers if f <= self.current_frame), default=None)
            if applicable_frame_number is not None:
                marker_data = self.markers[applicable_frame_number]
                if not marker_data['arrows']:
                    return
                # The first arrow is the reference for converting y to a 0-100 position
                ref = marker_data['arrows'][0]
                self.current_time_ms = self.cap.get(cv2.CAP_PROP_POS_MSEC)
                hsv = cv2.cvtColor(frame, cv2.COLOR_BGR2HSV)
                # Interleave the gauges: bar 0 of every arrow, then bar 1, and so on
                for bar_points in zip(*[a['points_of_interest'] for a in marker_data['arrows']]):
                    for x, y, _, _ in bar_points:
                        color = np.array(hsv[y, x])
                        if np.all((color >= self.lower_color_range) & (color <= self.upper_color_range)):
                            # First matching point wins; arrow start maps to 100, arrow end to 0
                            y_distance = round(((ref['ref_y'] - y) / ref['distance']) * 100)
                            self.analysis_results[round(self.current_time_ms)] = y_distance
                            return
        except Exception as e:
            logging.exception(f"Exception during frame analysis: {e}")
            QMessageBox.critical(self, "Error", f"An error occurred during analysis:\n{e}")
            self.is_analyzing = False
            if self.analysis_timer:
                self.analysis_timer.stop()
            self.start_analysis_button.setText("Start Analyzing")

    def simplify_results(self, data, plateau_threshold=300):
        """Reduce (time_ms, position) samples to peaks, valleys and plateau edges."""
        def as_funscript(points):
            return {"version": "1.0", "inverted": False, "range": 90,
                    "actions": [{"at": at, "pos": pos} for at, pos in points]}

        if len(data) < 2:
            return as_funscript(data)

        n0, n1, n2 = data[0], data[1], (0, 0)
        results = [n0]
        is_plateau = False
        # Start at the third sample: data[0] and data[1] are already held in n0 and n1
        for x in data[2:]:
            if x[1] == n1[1]:
                # Same position as before: extend the plateau
                n2 = x
                is_plateau = True
            elif (n0[1] < n1[1] < x[1]) or (n0[1] > n1[1] > x[1]):
                # Still moving in the same direction: only long plateaus are kept
                if is_plateau and (n2[0] - n1[0]) > plateau_threshold:
                    results += [n1, n2]
                    n0, n1 = n2, x
                else:
                    n0, n1 = n1, x
                is_plateau = False
            else:
                # Direction changed: n1 (or the plateau it started) is a peak or valley
                if is_plateau:
                    plateau_length = (n2[0] - n1[0])
                    if plateau_length > plateau_threshold:
                        results += [n1, n2]
                    elif plateau_length > 1:
                        # Short plateau: collapse it to a single point at its midpoint
                        timestamp, value = (round(n2[0] - plateau_length / 2), n2[1])
                        results.append((timestamp, value))
                    n0, n1 = n2, x
                else:
                    results.append(n1)
                    n0, n1 = n1, x
                is_plateau = False
        if data[-1][1] != results[-1][1]:
            results.append(data[-1])
        return as_funscript(results)

    def ms_to_time(self, millis):
        seconds = millis / 1000
        minutes, seconds = divmod(seconds, 60)
        hours, minutes = divmod(minutes, 60)
        return f"{hours:02.0f}:{minutes:02.0f}:{seconds:06.3f}"

    def analysis_finished(self):
        was_running = self.is_analyzing
        self.is_analyzing = False
        if self.analysis_timer:
            self.analysis_timer.stop()
        self.start_analysis_button.setText("Start Analyzing")
        logging.debug("Analysis finished." if was_running else "Analysis stopped by user.")
        # Prompt to save results
        self.save_results(self.analysis_results)

    def save_results(self, results):
        if not results:
            QMessageBox.information(self, "No Results", "No results to save.")
            return
        logging.debug("Saving results.")
        file_name, _ = QFileDialog.getSaveFileName(self, "Save Results", "", "Funscript Files (*.funscript)")
        if file_name:
            with open(file_name, 'w') as f:
                json.dump(self.simplify_results(list(results.items())), f)
            QMessageBox.information(self, "Success", "Results saved successfully.")
            logging.debug(f"Results saved to {file_name}")

    def show_error(self, error_message):
        logging.error(f"Error during analysis: {error_message}")
        QMessageBox.critical(self, "Error", f"An error occurred during analysis:\n{error_message}")

    def calculate_points(self, start_pt, end_pt, num_points):
        """Return num_points evenly spaced (x, y, label, rgb) samples along the arrow."""
        points = []
        x_spacing = (end_pt[0] - start_pt[0]) / (num_points - 1)
        y_spacing = (end_pt[1] - start_pt[1]) / (num_points - 1)
        # Convert the frame once instead of once per point
        hsv = cv2.cvtColor(self.frame, cv2.COLOR_BGR2HSV)
        for i in range(num_points):
            x = start_pt[0] + i * x_spacing
            y = start_pt[1] + i * y_spacing
            color = hsv[int(y), int(x)]
            label = str(color)
            # Green label when the reading is inside the configured range, red otherwise
            in_range = np.all((color >= self.lower_color_range) & (color <= self.upper_color_range))
            label_color = (0, 255, 0) if in_range else (255, 0, 0)
            points.append((int(x), int(y), label, label_color))
        return points

    def update_points_of_interest(self):
        try:
            # Clear previous points
            self.video_label.points_of_interest = []

            # Determine applicable markers
            applicable_frame_numbers = sorted([f for f in self.markers.keys() if f <= self.current_frame])
            if applicable_frame_numbers:
                marker_frame = applicable_frame_numbers[-1]
                marker_data = self.markers[marker_frame]
                for arrow in marker_data['arrows']:
                    start, end = arrow['coordinates']
                    points = self.calculate_points((start.x(), start.y()), (end.x(), end.y()), marker_data['num_bars'])
                    self.video_label.points_of_interest.extend(points)
            self.video_label.update()
        except Exception as e:
            logging.exception(f"Exception in update_points_of_interest: {e}")
            self.show_error(str(e))


if __name__ == "__main__":
    try:
        app = QApplication(sys.argv)
        window = MainWindow()
        window.show()
        sys.exit(app.exec())
    except Exception as e:
        logging.exception("Unhandled exception occurred in the application")
```
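The point simplification in `simplify_results` is probably the least obvious part of the script, so here is a stripped-down sketch of the idea that can be run without OpenCV or Qt. It is not the same algorithm: it keeps both edges of every plateau and has no `plateau_threshold`, whereas the script collapses short plateaus to their midpoint. The names `keep_turning_points` and `to_funscript` are made up for this illustration; the JSON fields mirror the ones the script writes.

```python
import json


def keep_turning_points(samples):
    """Keep the first and last sample plus every point where the direction changes.

    samples is a list of (time_ms, position) tuples sorted by time.
    """
    if len(samples) < 3:
        return list(samples)
    kept = [samples[0]]
    for prev, cur, nxt in zip(samples, samples[1:], samples[2:]):
        delta_in = cur[1] - prev[1]
        delta_out = nxt[1] - cur[1]
        # A point in the middle of a steady rise, fall, or flat run adds no information
        same_direction = (
            (delta_in > 0 and delta_out > 0)
            or (delta_in < 0 and delta_out < 0)
            or (delta_in == 0 and delta_out == 0)
        )
        if not same_direction:
            kept.append(cur)
    kept.append(samples[-1])
    return kept


def to_funscript(points):
    """Wrap (time_ms, position) pairs in the same structure the script saves."""
    return {"version": "1.0", "inverted": False, "range": 90,
            "actions": [{"at": at, "pos": pos} for at, pos in points]}


# Hypothetical raw readings: rise, hold at 60, fall to 0, then rise again
raw = [(0, 0), (33, 20), (66, 40), (100, 60), (133, 60),
       (166, 60), (200, 30), (233, 0), (266, 10)]
simplified = keep_turning_points(raw)
print(simplified)  # [(0, 0), (100, 60), (166, 60), (233, 0), (266, 10)]
print(json.dumps(to_funscript(simplified)))
```

Nine readings shrink to five here, which is roughly why the raw output needs so little cleanup afterwards: only the points where the gauge changes direction or holds still survive.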