I’m creating this since I get asked a lot about my process for cleaning up videos.

The funny history behind the name: I joined this forum having no idea what to expect and no intention of participating, so when I picked my username I just mashed my keyboard. The first 5 characters happened to be g90ak.

Fast forward a few months: I picked up a Handy, was really starting to appreciate the community built here, and wanted to start giving back in some way. Unfortunately I didn’t have enough time to script myself, but I did have electricity and good GPUs. I asked the mods to shorten my name, whipped up a quick avatar, and got to work.

The term “g90ak’ed” was literally a personal tag to help me keep track of what I had processed, so I wouldn’t confuse original and processed versions on my hard drive. I started sharing them with the community, and things just kinda grew from there.

There is no secret sauce to my processed videos. My workflow consists of:

- Avisynth - cropping, color correction, levels correction, deinterlacing, and edge enhancement. I mostly use functions from SmoothTweak, Hysteria, QTGMC, SmoothLevels, AutoLevels, and Spline16Resize, plus a few others for special cases.

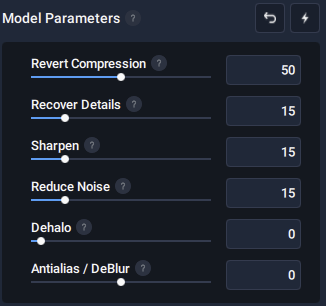

- Topaz Video Enhance AI 2.6.4 - upscaling, using Artemis HQ for live-action video or Proteus at 50/15/15/15/0/0 for anime or CGI content. Turn “Add grain” off and export to a lossless .mp4 container. The other models aren’t worth messing around with. Also try not to run multiple AI models in series; it doesn’t improve the result.

- Flowframes - frame doubling/HFR. For videos at 4K or below, export as H.264 with a CRF of 19. Set scene-change handling to “duplicate last frame” and use the RIFE 2.3 model (slowest, but fewest artifacts).

- XMedia Recode - simply to mux the original clip’s audio back into the processed video.
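XMedia Recode is a GUI tool. For anyone who prefers the command line, the same audio rejoin can be done with ffmpeg using stream copy; this is a swapped-in alternative, not the tool used in the workflow above. The minimal sketch below only builds the ffmpeg argument list. The helper name and file names are hypothetical, and it assumes ffmpeg is installed and that the original audio codec (e.g. AAC) is one the .mp4 container accepts.

```python
import shlex


def remux_audio_cmd(processed: str, original: str, output: str) -> list[str]:
    """Hypothetical helper: take video from `processed` and audio from `original`.

    Both streams are copied, not re-encoded, so nothing is lost in quality.
    """
    return [
        "ffmpeg",
        "-i", processed,   # input 0: upscaled / frame-doubled video (no audio)
        "-i", original,    # input 1: original clip, used only for its audio
        "-map", "0:v:0",   # first video stream of input 0
        "-map", "1:a:0",   # first audio stream of input 1
        "-c", "copy",      # stream copy for every mapped stream
        output,
    ]


if __name__ == "__main__":
    # Hypothetical file names for illustration.
    cmd = remux_audio_cmd("clip_g90ak.mp4", "clip_original.mp4", "clip_final.mp4")
    print(shlex.join(cmd))
```

To actually run it, pass the list to `subprocess.run(cmd, check=True)`. If the original audio can't be stored in .mp4 as-is, replacing `-c copy` with `-c:v copy -c:a aac` keeps the video untouched and re-encodes only the audio.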

For VR videos, I skip Flowframes since they’re already 50-60 fps, and use RipBot264 to encode to H.265 in an .mp4 container, with a single CRF 19 pass. Do not use H.264 for VR videos: they are usually larger than 4K, and most hardware decoders won’t accelerate H.264 at those resolutions, which makes the video very hard to play back.
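RipBot264 is a GUI tool. As a rough command-line stand-in (a swapped-in tool, not the workflow described above), ffmpeg’s libx265 encoder in CRF mode does the same kind of single-pass, constant-quality H.265 encode. In this minimal sketch the helper name and file names are hypothetical, and the `slow` preset and 8-bit `yuv420p` pixel format are assumptions chosen for broad hardware-decoder compatibility, not settings from this post; only CRF 19 comes from the text.

```python
import shlex


def hevc_encode_cmd(src: str, dst: str, crf: int = 19, preset: str = "slow") -> list[str]:
    """Hypothetical helper: build a single-pass CRF H.265 encode command for ffmpeg."""
    return [
        "ffmpeg",
        "-i", src,
        "-c:v", "libx265",      # H.265/HEVC via the x265 library
        "-crf", str(crf),       # CRF is a single-pass constant-quality mode; 19 per the post
        "-preset", preset,      # assumption: slower presets compress better at the same CRF
        "-pix_fmt", "yuv420p",  # assumption: 8-bit 4:2:0 (HEVC Main profile) for hardware decoders
        "-c:a", "copy",         # pass any audio through untouched
        dst,
    ]


if __name__ == "__main__":
    # Hypothetical file names for illustration.
    print(shlex.join(hevc_encode_cmd("vr_upscaled.mp4", "vr_final.mp4")))
```

CRF mode never needs a second pass, since it targets a quality level rather than a file size; a two-pass encode only matters when you need to hit a specific bitrate.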

That’s it. I don’t follow a strict process, since each video needs its own settings depending on which issues it has, if any. Things I take into consideration include digital noise, white balance problems, logos that will interfere with frame doubling, and compression artifacts.

This is a very CPU- and GPU-intensive process. My rig draws 350 to 550 watts continuously while processing a video, and a half-hour video can take 5 to 8 hours depending on complexity (10th-gen i9 @ 5 GHz + RTX 3080). I wouldn’t recommend getting into this without at least a 6th-gen i7 CPU and a GTX 1080 Ti unless you’re only doing clips of 5 minutes or shorter.
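To put those numbers in per-video terms, energy is just power times time. This sketch multiplies the ranges quoted above; it assumes a constant draw for the whole run, so the two ends (350 W for 5 hours vs. 550 W for 8 hours) are rough bounds, not measurements.

```python
def energy_kwh(watts: float, hours: float) -> float:
    """Energy used at a constant power draw: kWh = W * h / 1000."""
    return watts * hours / 1000.0


def realtime_factor(processing_hours: float, video_hours: float) -> float:
    """How many times the video's runtime the processing takes."""
    return processing_hours / video_hours


# Figures from the post: 350-550 W, 5-8 hours for a 30-minute (0.5 h) video.
best_case = energy_kwh(350, 5)   # 1.75 kWh
worst_case = energy_kwh(550, 8)  # 4.4 kWh
print(f"Energy per half-hour video: {best_case:.2f} to {worst_case:.2f} kWh")
print(f"Processing takes {realtime_factor(5, 0.5):.0f}x to "
      f"{realtime_factor(8, 0.5):.0f}x the video's runtime")
```

This prints 1.75 to 4.40 kWh and 10x to 16x. Multiplying the kWh figures by your local electricity rate gives a rough cost per video.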

Learning how to use Avisynth is the hardest part. There’s a good wiki and some tutorials out there, but I can’t recreate them here. You can skip Avisynth and still get pretty good results; however, it’s really the big difference between my versions and most others.