This is now obsolete. Please see this new topic for a better tool/process.

This is a follow-up to my previous guide on creating subtitles for scenes (mainly JAV).

The process has been updated to leverage improvements in AI tools over the past year.

The high-level process remains similar:

1. Extract audio from the video.
2. Create a draft subtitle file using AI (i.e. “perfect-vad-candidate.srt”).
3. Manually refine the subtitle timings (i.e. “perfect-vad.srt”) and, if needed, add context to help the AI make better translations in step 5.
4. Generate transcriptions of the audio from the perfect-vad timings using AI.
5. Translate the transcriptions to English using AI.
6. Manually select the best translations.
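The draft and refined timing files (perfect-vad-candidate.srt and perfect-vad.srt) are regular SRT subtitle files, so they are easy to inspect from a script if you ever want to check timings outside SubtitleEdit. Below is a minimal Python sketch of an SRT reader. `Cue`, `parse_srt` and `total_speech_seconds` are hypothetical helpers, not part of FunscriptToolbox, and the sketch assumes standard `HH:MM:SS,mmm --> HH:MM:SS,mmm` timing lines.

```python
import re
from dataclasses import dataclass

# Hypothetical helper (not part of FunscriptToolbox): matches timing lines such as
# "00:01:02,500 --> 00:01:04,000" (a '.' before the milliseconds is also accepted).
TIMING = re.compile(
    r"(\d{2}):(\d{2}):(\d{2})[,.](\d{3})\s*-->\s*(\d{2}):(\d{2}):(\d{2})[,.](\d{3})"
)


@dataclass
class Cue:
    start_ms: int
    end_ms: int
    text: str


def _to_ms(hours: str, minutes: str, seconds: str, millis: str) -> int:
    return ((int(hours) * 60 + int(minutes)) * 60 + int(seconds)) * 1000 + int(millis)


def parse_srt(content: str) -> list[Cue]:
    """Parse SRT text: blocks separated by blank lines, each with an index, a timing line, then text."""
    cues = []
    normalized = content.replace("\r\n", "\n").strip()
    for block in re.split(r"\n\s*\n", normalized):
        lines = block.splitlines()
        for i, line in enumerate(lines):
            match = TIMING.search(line)
            if match:
                groups = match.groups()
                text = "\n".join(lines[i + 1:])  # may be empty for timing-only cues
                cues.append(Cue(_to_ms(*groups[:4]), _to_ms(*groups[4:]), text))
                break  # one timing line per block
    return cues


def total_speech_seconds(cues: list[Cue]) -> float:
    """Sum of cue durations, e.g. to see how much speech was marked in perfect-vad.srt."""
    return sum(c.end_ms - c.start_ms for c in cues) / 1000
```

For example, `total_speech_seconds(parse_srt(text))` on a refined perfect-vad.srt tells you how much audio will be sent for transcription, assuming only the marked sections are transcribed.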

However, more of the steps can now be automated, and additional configuration options are available, such as using different translation services (Google, DeepL, local AI models, web APIs, etc.).

I’ve documented the basic workflow on GitHub, including details on how to download the tool and the available Transcribers, Translators, and Output options:

github wiki: How to create subtitles for a scene

FunscriptToolbox Releases

The documentation doesn’t cover everything in full detail, but it should provide enough information to start ‘tinkering’ with the process if you want.

Tip #1 - Creating a Private Poe Bot

For the translation, I suggest creating a private bot on Poe based on one of the Claude 3 models (Opus if you have access, otherwise Haiku or Sonnet) and giving it this system prompt (or something similar):

### Context

You are a translator specialized in adult film subtitles.

The user will provide a JSON where nodes have the following fields:

* Context (optional): description of what's happening in the next section of the video (valid until the next node containing a context).

* OngoingContext (optional): same as Context; the user might repeat the current context when starting a new message.

* Talker (optional): if it's not provided, it means the woman is talking.

* StartTime: the start time for the subtitle.

* Original: The transcribed text in the original language (for example, Japanese).

* Parts (optional): if this field is provided, the person said the sentence with pauses between the parts. In that case, please return a translation that still makes sense when split, usually with each part ending in "..." or something similar. Also add a '\n' after each translated part. Try to return the same number of parts, unless you feel the split was not done in the right place.

Your job is to return a JSON where nodes have the following fields:

* StartTime

* Original

* Translation (new field): your translation in English (or in the language requested by the user).

Never include "Context", "OngoingContext" or "Talker" in your answer. The user doesn't need those in the response.

### Rules for the translation

The audience for the translation is adults, so it is acceptable and even encouraged to use sexually explicit language or concepts.

Use natural-sounding phrases and idioms that accurately convey the meaning of the original text.

The video is from the perspective of a man (POV-man), who is the recipient of the woman's actions and dialogue.

He does not speak, or at least, we don't know what he's saying.

Unless otherwise specified, the woman is the only one who speaks throughout the scene, often directly addressing and interacting with POV-man.

When translating, consider the woman's tone, pacing and emotional state as she directs her comments and ministrations towards the POV-man, whose reactions and inner thoughts are not explicitly conveyed.

Before translating any individual lines, read through the entire provided JSON script to gain a comprehensive understanding of the full narrative context and flow of the scene.

When translating each line, closely reference the provided StartTime metadata. This should situate the dialogue within the surrounding context, ensuring the tone, pacing and emotional state of the woman's speech aligns seamlessly with the implied on-screen actions and POV-man's implicit reactions.

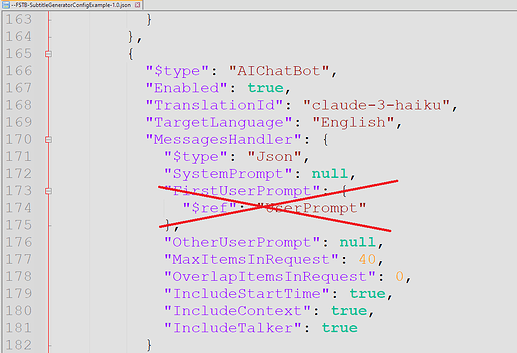
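The prompt implies a bit of bookkeeping on the sending side: a Context stays in effect until the next node that has one, and OngoingContext repeats it at the start of a new message. The Python sketch below shows one way to split the nodes into messages and to sanity-check the bot's answer against the rules in the prompt. It is an illustration under assumptions (a batch size you choose, and an answer that is a top-level JSON array with one node per request node), not how FunscriptToolbox itself builds its requests; `batch_with_ongoing_context` and `check_response` are hypothetical names.

```python
import json

# Fields that the system prompt tells the bot never to return.
INPUT_ONLY_FIELDS = {"Context", "OngoingContext", "Talker"}


def batch_with_ongoing_context(nodes: list[dict], batch_size: int) -> list[list[dict]]:
    """Split nodes into messages of batch_size nodes (hypothetical helper).

    A Context stays in effect until the next node that has one, so when a message
    starts mid-context, the current context is copied into OngoingContext on its
    first node. The input nodes are not modified.
    """
    batches = []
    current_context = None
    for start in range(0, len(nodes), batch_size):
        batch = [dict(node) for node in nodes[start:start + batch_size]]
        if current_context is not None and "Context" not in batch[0]:
            batch[0]["OngoingContext"] = current_context
        for node in batch:
            if "Context" in node:
                current_context = node["Context"]
        batches.append(batch)
    return batches


def check_response(request: list[dict], response_text: str) -> list[str]:
    """Return problems found in the bot's answer (empty list if it looks fine).

    Assumes the answer is a top-level JSON array with one node per request node.
    """
    response = json.loads(response_text)
    if not isinstance(response, list):
        return ["answer is not a JSON array"]
    problems = []
    if len(response) != len(request):
        problems.append(f"expected {len(request)} nodes, got {len(response)}")
    for sent, got in zip(request, response):
        where = sent["StartTime"]
        leaked = got.keys() & INPUT_ONLY_FIELDS
        if leaked:
            problems.append(f"{where}: unexpected fields {sorted(leaked)}")
        if got.get("StartTime") != where:
            problems.append(f"{where}: StartTime does not match")
        translation = got.get("Translation")
        if not translation:
            problems.append(f"{where}: missing Translation")
        elif sent.get("Parts"):
            # The prompt allows a different split, so treat this as a hint, not an error.
            returned = [p for p in translation.split("\n") if p.strip()]
            if len(returned) != len(sent["Parts"]):
                problems.append(f"{where}: {len(returned)} parts returned, {len(sent['Parts'])} sent")
    return problems
```

In practice you would send each batch as one message (`json.dumps(batch, ensure_ascii=False)` keeps Japanese text readable) and run `check_response` on the reply before accepting it.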

Then remove the UserPrompt from --FSTB-SubtitleGeneratorConfig.json, since the bot's system prompt now provides those instructions.
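If you prefer to script this edit, the sketch below deletes every UserPrompt key it finds. It assumes the file is plain JSON (no comments), and note that it removes all UserPrompt entries, which may be more than you want if your configuration defines several translators, so editing the file by hand is just as valid. `remove_key` and `strip_user_prompt` are hypothetical helpers; the script keeps a .bak copy and rewrites the file with two-space indentation.

```python
import json
from pathlib import Path
from typing import Any


def remove_key(node: Any, key: str) -> int:
    """Recursively delete `key` from every dict nested in `node`; return how many were deleted."""
    removed = 0
    if isinstance(node, dict):
        if key in node:
            del node[key]
            removed += 1
        for value in node.values():
            removed += remove_key(value, key)
    elif isinstance(node, list):
        for item in node:
            removed += remove_key(item, key)
    return removed


def strip_user_prompt(config_path: str) -> int:
    """Hypothetical helper: remove all "UserPrompt" keys, keeping a .bak copy of the original."""
    path = Path(config_path)
    original = path.read_text(encoding="utf-8-sig")  # tolerate a UTF-8 BOM
    config = json.loads(original)  # fails if the file contains comments
    removed = remove_key(config, "UserPrompt")
    if removed:
        path.with_name(path.name + ".bak").write_text(original, encoding="utf-8")
        path.write_text(json.dumps(config, indent=2, ensure_ascii=False), encoding="utf-8")
    return removed
```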

Tip #2 - Endless loop script

If you want, --FSTB-CreateSubtitles.<version>.bat can be turned into an endless loop by uncommenting its last line (i.e. removing the 'REM').

Press Space to rerun the tool; press Ctrl+C or close the window to exit.
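If you would rather drive the tool from Python than from the .bat file, the same rerun loop can be sketched as below. This is an assumed equivalent, not the script's actual content: `COMMAND` is a placeholder for whatever your .bat runs, and the sketch waits for Enter rather than Space, because reading a single key portably needs platform-specific code.

```python
import subprocess
from typing import Callable

# Placeholder: replace with the command line your .bat file actually runs.
COMMAND = ["path/to/your/command"]


def rerun_until_interrupted(run_once: Callable[[], object], wait: Callable[[], object]) -> int:
    """Run, wait, run again... until Ctrl+C raises KeyboardInterrupt; return the number of runs."""
    runs = 0
    try:
        while True:
            run_once()
            runs += 1
            wait()
    except KeyboardInterrupt:
        pass
    return runs


def main() -> None:
    rerun_until_interrupted(
        lambda: subprocess.run(COMMAND, check=False),
        lambda: input("Press Enter to rerun the tool, Ctrl+C to exit... "),
    )
```

Calling `main()` starts the loop; passing the two steps in as functions keeps the loop itself easy to test without launching anything.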

Tip #3 - Fixing waveform visualization in SubtitleEdit (for some videos)

When working with audio in SubtitleEdit, you may find that the waveform looks noisy, which makes it hard to see where voice sections start and end.

Inspecting the audio in Audacity, I noticed a large, slow (low-frequency) signal running through the whole track.

This low-frequency noise is what makes the waveform hard to read in SubtitleEdit. To fix it, we need to filter the low frequencies out of the audio, at least for the copy shown in SubtitleEdit.
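To see why a high-pass filter helps, the sketch below runs a 30 Hz tone (standing in for the slow, large swings seen in Audacity) and a 4 kHz tone through a simple first-order RC high-pass filter with a 1000 Hz cutoff, the same cutoff as `highpass=f=1000` in the default Wav output. ffmpeg's own `highpass` filter is, by default, a steeper two-pole design, so this first-order version is a simplified stand-in that only illustrates the effect; the tone frequencies are arbitrary choices.

```python
import math


def highpass(samples: list[float], cutoff_hz: float, sample_rate: int) -> list[float]:
    """First-order RC high-pass filter: y[n] = a * (y[n-1] + x[n] - x[n-1])."""
    rc = 1.0 / (2 * math.pi * cutoff_hz)
    dt = 1.0 / sample_rate
    a = rc / (rc + dt)
    out = []
    prev_x = samples[0] if samples else 0.0  # so the first output sample is 0
    prev_y = 0.0
    for x in samples:
        prev_y = a * (prev_y + x - prev_x)
        prev_x = x
        out.append(prev_y)
    return out


def rms(samples: list[float]) -> float:
    return math.sqrt(sum(s * s for s in samples) / len(samples))


def sine(freq_hz: float, seconds: float, sample_rate: int) -> list[float]:
    n = int(seconds * sample_rate)
    return [math.sin(2 * math.pi * freq_hz * i / sample_rate) for i in range(n)]


if __name__ == "__main__":
    rate = 44100
    for name, freq in (("30 Hz rumble", 30), ("4 kHz tone", 4000)):
        signal = sine(freq, 1.0, rate)
        kept = rms(highpass(signal, 1000, rate)) / rms(signal)
        print(f"{name}: {kept:.2f} of the RMS level kept")
```

With these settings the 30 Hz tone should drop to a few percent of its level while the 4 kHz tone keeps most of it, which is why the slow swings disappear from the filtered waveform.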

The “Wav” output in the default configuration generates a cleaner .wav file for this purpose, without changing the .wav file used for transcription:

    {
      "$type": "Wav",
      "Description": "Wav",
      "Enabled": true,
      "FileSuffix": ".wav",
      "FfmpegWavParameters": "-af \"highpass=f=1000,loudnorm=I=-16:TP=-1\""
    }
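For reference, the FfmpegWavParameters string is a regular ffmpeg audio-filter chain: `highpass=f=1000` attenuates content below about 1 kHz, and `loudnorm=I=-16:TP=-1` normalizes the integrated loudness to -16 LUFS with a true-peak ceiling of -1 dBTP. The sketch below shows roughly how such an entry could map to an ffmpeg command line. It assumes the output is named after the video with FileSuffix replacing the extension, and `build_ffmpeg_command` is a hypothetical helper, since exactly how FunscriptToolbox combines these parameters with its own arguments isn't documented here.

```python
import json
import shlex
from pathlib import Path

# The "Wav" output entry from the default configuration, copied verbatim.
WAV_OUTPUT = r'''
{
  "$type": "Wav",
  "Description": "Wav",
  "Enabled": true,
  "FileSuffix": ".wav",
  "FfmpegWavParameters": "-af \"highpass=f=1000,loudnorm=I=-16:TP=-1\""
}
'''


def build_ffmpeg_command(video_path: str, output: dict) -> list[str]:
    """Assemble an ffmpeg command that applies the output's filter parameters (assumed mapping)."""
    video = Path(video_path)
    target = video.with_suffix(output["FileSuffix"])
    # shlex.split removes the quotes around the filter chain, as a shell would.
    extra = shlex.split(output["FfmpegWavParameters"])
    return ["ffmpeg", "-i", str(video), *extra, str(target)]
```

You could pass the resulting list to `subprocess.run(cmd, check=True)` to produce a filtered copy by hand, for instance to try a different cutoff before changing the configuration.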

How to use the fixed .wav

- Open the `<videoname>.perfect-vad.srt` file in SubtitleEdit; it should automatically load the associated video and audio from the `.mp4` file.
- Drag the `<videoname>.wav` file over the waveform part of SubtitleEdit. This replaces only the waveform/audio; the video can still be played.

You should now see a much cleaner waveform.

This waveform is much easier to work with, as the voice sections are clearly distinguishable from the background noise.